Introduction

The term “pair programming” was originally coined by Kent Beck and refers to a software engineering technique in which two people sit side by side at the same desk and work on the same task. The practice can be applied in all development phases, including not only coding but also design, algorithm design, testing, debugging and so on [1](p. 312). The basic setup consists of two roles, a driver and a navigator. The driver focuses on the task at hand and writes the code or puts down the design. The navigator is responsible for real-time review: he or she observes the driver and thinks of strategies for resolving any defects. Because the navigator is not as deeply involved as the driver, he or she can keep an objective point of view and think strategically about the direction of the work. If the navigator is quiet and does not communicate, the pair is considered dysfunctional [1](p. 311). During a pairing session the driver and the navigator should switch roles. It is also common for partners to rotate among team members.

Benefits and Limitations

Pair programming is considered a controversial technique because it has both benefits and limitations.

Communication

First of all, pair programming turns development from a solo activity into a more social one, because the participants communicate constantly. The two peers become “brainstorming partners”. Research suggests [2](p. 51, Pair Programming Chat) that they solve problems much faster and that the quality of the work is usually higher because of the ideas flowing between them.

Additionally, they are “chatting partners” [2](p. 51, Pair Programming Chat). This improves effectiveness, since the peers are continuously talking and triggering new ideas. When one peer gets stuck, the conversation helps them get unstuck, in the same way that solo programmers benefit from talking about their problems out loud. Furthermore, “pair pressure” [2](p. 53, Fighting Poor Practices) creates the feeling of not wanting to let your partner down. As a result, a relationship develops between the two partners, which can lead to greater satisfaction [3](p. 4, Satisfaction). In particular, programmers often feel that pair programming takes them out of their “comfort zone”, but research has shown [3](p. 3, Economics) that after some time, once a working relationship had been built, programmers enjoyed pair programming more and were more confident than when they programmed alone.

Importance of Peer Rotation

As already mentioned, communication continuously triggers new ideas in a pair. However, psychological research shows [2](p. 53, Pair programmers notice more details) that when two programmers pair together, the things they notice and fail to notice become more and more similar. Eventually, the benefit of two pairs of eyes becomes negligible. This effect is called “pair fatigue” and it significantly affects the productivity of a pair. For this reason, Kent Beck suggests that pairs should rotate frequently, even twice per day.
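The rotation idea can be illustrated with a small scheduling sketch. The code below is only one possible approach, not something prescribed by the cited sources: it uses the classic round-robin (“circle”) method so that every team member pairs with every other member exactly once before any pairing repeats. The function name `rotation_schedule`, the placeholder `"(solo)"` and the example names are assumptions made for illustration.

```python
from typing import List, Tuple

Pair = Tuple[str, str]


def rotation_schedule(members: List[str]) -> List[List[Pair]]:
    """Return one list of pairs per rotation slot (hypothetical helper).

    Uses the round-robin circle method: the first person stays fixed
    and everyone else shifts one seat per slot.
    """
    people = list(members)
    if len(people) % 2 == 1:
        # Odd team size: whoever is paired with the placeholder works alone.
        people.append("(solo)")
    n = len(people)
    slots: List[List[Pair]] = []
    for _ in range(n - 1):
        # Pair the i-th person from the front with the i-th from the back.
        slots.append([(people[i], people[n - 1 - i]) for i in range(n // 2)])
        # Keep the first person fixed and rotate the rest by one position.
        people = [people[0], people[-1]] + people[1:-1]
    return slots


if __name__ == "__main__":
    # Example names are made up; a slot could be half a day if pairs
    # rotate twice per day.
    for slot, pairs in enumerate(rotation_schedule(["Ana", "Ben", "Chloe", "Dev"]), 1):
        print(f"slot {slot}: {pairs}")
```

With four members this yields three slots that together cover all six possible pairings. In practice a team would probably adjust such a schedule by hand, for example to keep an expert available for a particular module.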

Knowledge Sharing

Next, and what I personally consider the most important aspect, is knowledge sharing. In a big team there is a wide range of expertise among the members. As a result, when people work together, knowledge is constantly passed between the participants. This knowledge includes tool usage tips, design and programming insights, and overall skills. In addition, since two peers who work together will not have the same prior knowledge, one will spot some insights or defects faster than the other.

Bad Habits

Software engineers are used to working alone, especially those who were educated this way. Many of them also believe that pair programming wastes their time because their peers will slow them down. However, psychology describes a phenomenon called “inattentional blindness” [2](p. 52, Pair programmers notice more details). Inattentional blindness suggests that if we do not know what to look for, we can stare right at it and still miss it, and that what we notice depends on what we expect to see. Consequently, even when two pair programmers concentrate on mostly the same parts of the work, they may notice different things.

Economics

The most challenging issue of pair programming is the financial one. Although many research results [1](p. 314-317) support the contrary, software organizations still believe that pair programming doubles development costs because two programmers work on one task, and most managers reject its usage for that reason [4](p. 1, intro). One solution is to inform management about the research and empirical results that show how, why and when pair programming works, so that they have a complete overview of the technique before rejecting it. However, in my experience, applying pair programming in a startup has a negative economic effect. A startup usually has to “move fast and break things”, so such companies focus less on the product quality that pair programming would provide and more on speed and parallel work. As a result, pair programming is not an appropriate method for this kind of company.

Coordination

Since more than one person works on a single task, the partners need to coordinate with each other. This is a problem because they have to work at the same time, in the same team, and therefore must synchronize their schedules. According to research [1](p. 321, Challenges), one way to resolve this issue is to determine the composition of the pair programming duos during the scrum meeting.

Distributed Teams

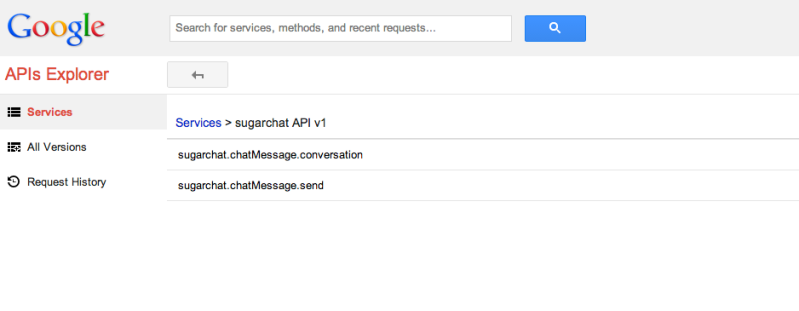

Finally, pair programming by definition implies that the participants have to be co-located. However, pair programming can also work in a distributed team.

Distributed Pair Programming

There is a whole branch of pair programming called “distributed pair programming”. Participants in a distributed team can work in pairs using a variety of tools that are available on the Internet. According to research [1](p. 319, Distributed pair programming), distributed pair programming has also proved to be beneficial, although not as much as co-located pair programming. More specifically, students viewed pair programming positively in general, but viewed distributed pair programming significantly less favorably.

Variations

There are some variations to the pair programming setup depending on the experience and the expertise of the two participants.

Expert-Expert

Of course, the obvious choice for managers would be to pair two experts in the field in order to achieve the highest productivity. However, this does not always work: both parties consider themselves experts, so their practices are unlikely to be questioned. As a result, the navigator’s feedback may not provide the desired insights and the process might be unproductive. On the other hand, in some cases only one person holds the knowledge of a module in the system, and the team can suffer if this person leaves. Here the “expert-expert” combination could save the day by increasing knowledge sharing and making that knowledge available to more people in the team.

Expert-Novice

This combination is the most frequently recommended one [2](p. 50) since it brings the maximum benefit to both the team and the product. The knowledge sharing in this case is significant: experts transfer their knowledge to novices, and novices can introduce new ideas, as they are more likely to be critical of established practices. In turn, an expert is more likely to challenge and inspire the novice. However, in this type of pairing a novice may be passive and hesitate to participate meaningfully in the process, which leads to an unsuccessful pair.

Novice-Novice

This pairing is usually discouraged [3](p. 6, Problem Solving – Learning) since knowledge sharing is very low and communication can prove difficult, because both participants tend to take a passive approach to the process. However, when used with students, it shows significantly better results than two novices working independently.

Empirical Study

Pair programming is a widely used practice that has been the subject of numerous studies in both industry and education. The results are presented extensively in [1](p. 313, A History Of Pair Programming), [3](p. 3, Economics), and [4](p. 3, Factors of the economic comparison model).

Pair Programming In Industry

The research shows [1](p. 313, A History Of Pair Programming), [3](p. 3, Economics) that in industry, products took 4% more time to develop with pair programming, but the quality of the code was much higher, with 39% fewer defects in user acceptance testing. Pair rotation is also a very common practice in industry, used to maintain the pair dynamic and the knowledge flow.

Pair Programming In Education

Pair programming in education has shown very good results, since it creates an environment that activates learning, enhances social interaction and boosts confidence. Students also feel more responsible for the task and are afraid of jeopardizing their partner’s grade. Therefore, they are more active and participate a lot more than they would alone. In the educational setting, pair rotation is very rare: educators prefer pairs to remain consistent for a whole semester, because the communication overhead of changing partners could be significant.

Conclusion

Although pair programming results in higher product quality, enhances learning, reduces risk through improved knowledge management and significantly enhances team spirit, it is hard to convince management to implement it. Therefore, managers should invest time in researching the subject and give it a try, since studies suggest that the results are very impressive and the technique is becoming more and more popular.

References

[1] Oram, A., and Wilson, G., 2010. Making Software: What Really Works, and Why We Believe It. O’Reilly Media, Inc.

[2] Wray, S., 2010. “How pair programming really works”. IEEE Software, 27(1), p. 50.

[3] Cockburn, A., and Williams, L., 2000. “The costs and benefits of pair programming”. Extreme Programming Examined, pp. 223–247.

[4] Williams, L., and Erdogmus, H., 2002. “On the economic feasibility of pair programming”. In International Workshop on Economics-Driven Software Engineering Research EDSER, Vol. 4.